ECE 254 Notes

2016/05/04

L01: Introduction to Operating Systems

-

OS are the programs that interface between hardware and software

-

responsible for resource management and allocation

-

kernel: the central part of the OS; always loaded in main memory

-

systems programming:

-

file manipulation

-

status information

-

programming-language support

-

communication

L02: Review of Computer Architecture

-

to execute a program we need:

-

main memory

-

system bus

-

processor

-

memory ideally should:

-

fast enough processor never has to wait

-

large enough to hold all data

-

inexpensive

-

that doesn’t work in real life – have a memory hierarchy where different types are optimized in certain ways

L03: Operating System Structure and Traps

-

layered OS: clearly defined areas (e.g. interfaces between hardware and kernel, kernel and user space)

-

modules: layers are divided into modules (e.g. module for device driver, scheduling, file system)

-

can be static or modular (loadable and swappable)

Trap

-

software-level interrupt is a trap (also known as exception)

-

usually generated by an error

-

also used to “wake up” the OS - when a program needs the OS to do something, it interrupts and the interrupt handler (part of the OS) does its thing

-

when this happens, mode bit of the processor is set to supervisor mode (as opposed to user mode)

Motivation for Dual-Mode Operation

-

protect system against dumb programs/user

Invoking a System Call

-

user program pushes arguments onto stack

-

invokes system call

-

sys call puts its ID in the designated location

-

sys call issues

trap

-

OS responds to interrupt and examines ID

-

OS runs the sys call handler that matches ID

-

when handler finished, control exists kernel and goes back to sys call (in user mode)

-

sys call returns control to user program

2016/05/09

L04: Processes

-

process:

program in execution; has three things:

-

instructions and data of program (compiled executive)

-

current state of the program

-

any resources needed to execute the program

-

PCB: Process Control Block

-

data structure used for managing processes

-

contains everything OS needs to know about process

-

usually contains:

-

identifier: unique ID (pid)

-

state

-

priority

-

program counter: location to store next instruction address (if needed)

-

register data: place to store current value of registers (if needed); context data

-

memory pointers: pointers to code and data associated with process; any memory the OS has allocated by request

-

I/O status information: outstanding requests, files, or I/O devices currently assigned

-

accounting information: data about process’ use of resources

Process Creation

-

certain events that lead to a process creation:

-

system boot up

-

user requests to start a new process

-

parent process spawns child

-

OS can spawn visible or background processes (daemons in unix)

Process Destruction:

-

happens in four ways:

-

normal exit (voluntary)

-

error exit (voluntary)

-

fatal error (involuntary)

-

killed by another process (involuntary)

Process Family Tree

-

process group: a process and all its descendants

-

signals can be sent to entire process group

-

in UNIX,

init

started first, parent of all processes; UNIX processes can be structured as a tree

-

processes return an exit code

-

when a child finishes, if the parent doesn’t collect its return code, it sits around as a

zombie

-

PCB and resources not cleaned up

-

if parent process dies before child, the child is an

orphan

-

automatically adopted by

init

in unix

-

in Windows, parents can give away their children (there isn’t really a hierachy)

-

in UNIX, you cannot give away your child

L05: Process State

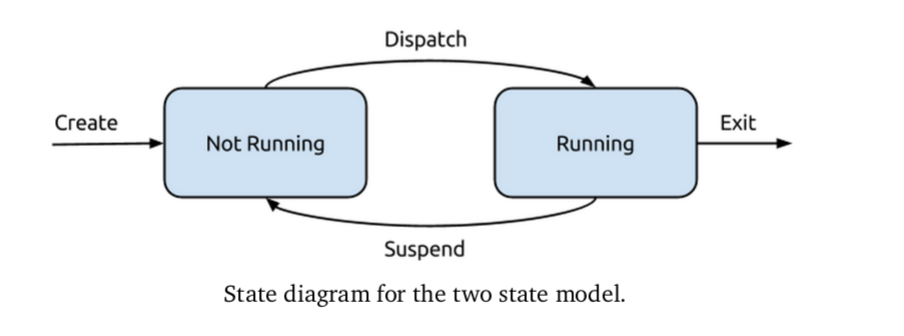

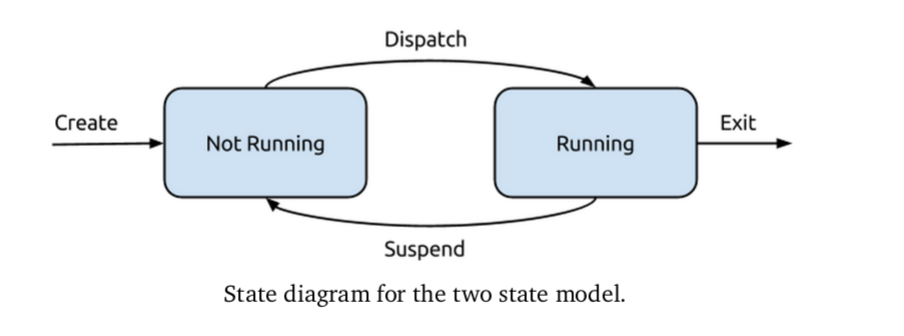

Two State Model

-

possible states:

-

running: actively executing

-

not running: not currently executing

-

when PCB created, state is

not running

-

whenever process switch happens, current process is set as

running

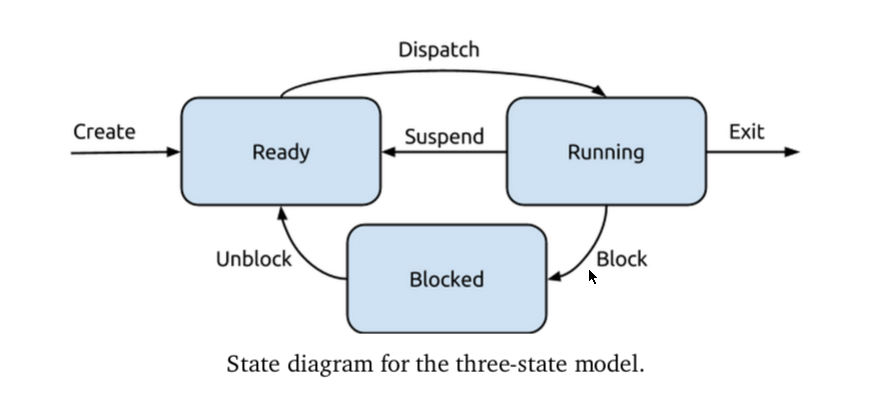

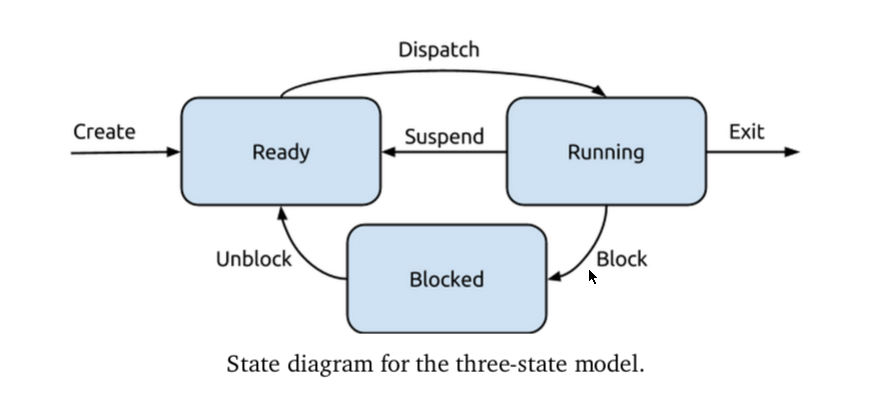

Three State Model

-

possible states:

-

running: actively executing

-

ready: not running, ready to run

-

blocked: not running, not ready until something happens

-

scheduler will never choose a blocked process to run

-

e.g. Say process p is waiting for user input. p is in blocked state. When user enters input, interrupt handler runs and sets state to ready

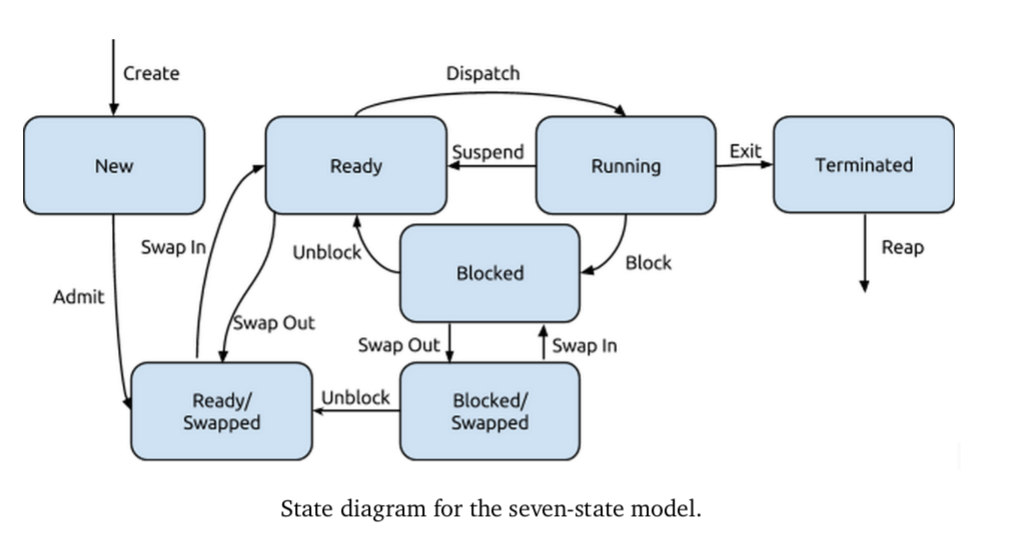

Five State Model

-

possible states:

-

running: actively executing

-

ready: not running, ready to run

-

blocked: not running, not ready until something happens

-

new: just created, not yet added to list of processes to run

-

terminated: finished executing, not yet cleaned up

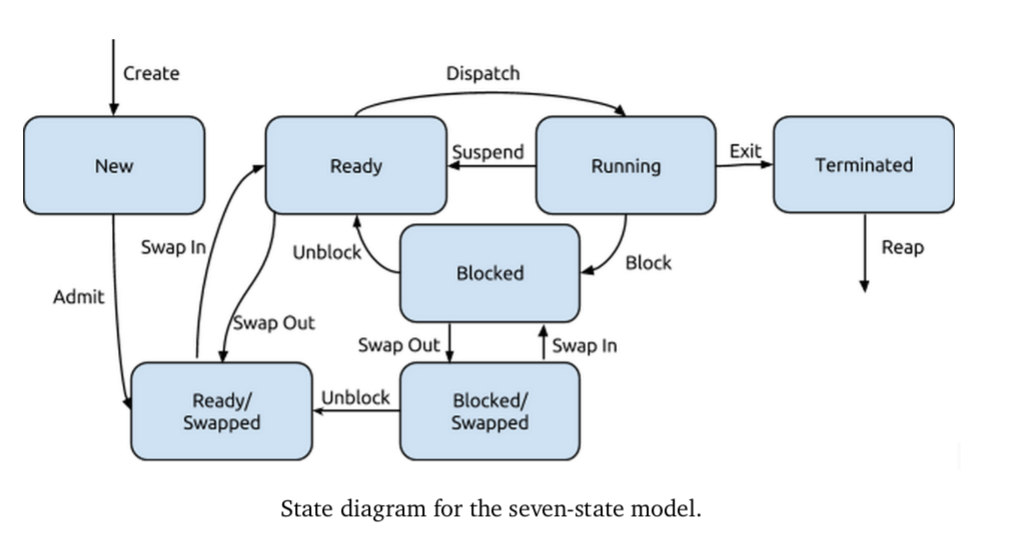

Process on Disk: Seven State Model

-

problem: user might want to run more processes than we have memory for. solution:

-

a process might be swapped out to disk rather than memory

-

need states to recognize process in memory / process in disk

-

by default, process starts out in disk

2016/05/13

L06: Processes in UNIX

-

pid

: process ID

-

interactive vs non-interactive processes

-

zarnett talks about screen and tells you to make multiple screens for different commands. Zarnett is

wrong

-

ctrl+a+c for new windows within session

-

ctrl+a+p, ctrl+a+n for switching between windows

-

boom, screen so much more fucking useful

-

child get resources from parents.

cgroups

can limit process child to x% of resources

-

parent spawns child with

fork

-

uses

wait

to wait for the child return code (not necessary)

-

fork

creates an exact duplicate of parent

-

return value of

fork

tells if parent (positive value,

pid

of child) or child (0 code)

-

child will run

exec

, loads binary file into memory and begin execution

Fork Bomb

-

process that forks itself - each child will also fork itself

-

can overwhelm system with processes

-

ways to limit damage:

-

limit how many processes can exist

-

rate limit creation of processes

L07: Inter-process Communication

-

IPC: communication between 2+ processes that want to co-ordinate/exchange data

-

the OS provides facilities to allow this data

-

processes must have an agreement on:

-

what data a message should contain

-

the way the data is formatted

-

can be synchronous or asynchronous

-

send and receive can be either

Implementation Strategies

-

shared memory

-

the file system

-

message passing

Shared Memory

-

part of memory is designated as being shared between multiple processes, all of whom can rw to it

-

since shared, one process can overwrite changes

-

need a mechanism for co-ordination

File System

-

store messages in the file system

-

persistent

-

can also be used when sender/receiver know nothing about each other

-

still have problem of co-ordination

Message Passing

-

service provided by the OS

-

sender will give the message to the OS and ask it be delivered to a recipient

-

two basic operations: sending and receiving

-

messages can be of fixed or variable length

-

sender needs to know an identifier for another process

-

indirect communication: send message to mailbox, receive mail from mailbox

-

more flexible than identifying process directly

-

anyone can send to mailbox, only owning process can receive

Message Queue

-

use a pipe as a message queue, woo (in UNIX)

-

pipes are unidirectional

-

pipe(int fileDescriptors[])

-

where

fileDescriptors[0]

is the read-end,

fileDescriptors[1]

is the write-end

-

named pipe:

when pipe stored on disk; these are bidirectional

2016/05/16

L08: Threads

-

a process has at least one thread, can have many

-

thread: sequence of executable commands that can be scheduled to run on CPU

-

each thread has its own:

-

thread execution state (running, ready, blocked, etc.)

-

saved thread context

-

execution stack

-

local variables

-

access to memory of process (shared with all threads)

Motivation for Threads

-

faster than creating new process

-

easier to terminate/clean up

-

takes less time to switch

-

share memory space -> communicate directly, no IPC needed

-

allows program to be responsive when a part of the program is blocked

Thread Drawbacks

-

no protection between threads in same process

-

one thread can easily mess with memory

-

if any thread encounters an error, all threads can be terminated by the OS

Thread States

-

each thread has its own state

-

uses the five state model

Thread Cancellation

-

two ways to be cancelled:

-

asynchronous:

one thread immediately terminates the other

-

deferred:

target is informed it is cancelled; target checks for this information, cleans itself up if it has been terminated

-

thread can ignore deferred cancellation, but it is useful when resources need to be properly cleaned up

Thread Types

-

user-level threads (ULTs):

run at the user level

-

OS does not need to support threads; can use a thread library

-

kernel-level threads (KLTs):

run at the kernel level

-

3 possible approaches to types:

-

all threads are user level

-

all threads are kernel level

-

a mix of both approaches

Advantages of ULT

-

thread switches do not require kernel mode privileges; do not have to switch to kernel mode every time

-

scheduling can be decided by program, not OS

-

portability - kernel does not have to support threads

Advantages of KLT

-

no problem with one thread blocking -> all threads blocking

-

(can be a problem with ULT,

jacketing

exists to stop that. Replaces a blocking system call with a non-blocking call from the library)

-

kernel routines can be multi-threaded

The Many-to-One Model

-

ULT-only model

-

many ULTs map to one KLT

-

thread management is done in user space

The One-to-One Model

-

KLT-only model

-

the creation of a new user level thread automatically creates a new KLT

-

approach that Linux and Windows uses

The Many-to-Many Model

-

maps ULTs to a smaller/equal number of KLTs

-

provides maximal concurrency in executing a single program

The One-to-Many Model?

-

DOES NOT EXIST

-

makes no sense for a ULT to be mapped to multiple KLTs

-

ULTs only need KLTs some of the time, having multiple KLTs waiting around is wasteful

L09: POSIX Threads

-

pthread

POSIX standard tells us how threads should work on UNIX OS

-

important calls:

-

pthread_create

: Create a new thread; analogous to

fork

-

pthread_exit

: Terminating the calling thread. Analogous to

exit

, has return value

-

pthread_join

: Wait for another thread to exit. Analogous to

wait

- the caller cannot proceed other thread exists

-

pthread_yield

: Release the CPU, let another thread run.

-

pthread_attr_init

: Create and initialize a thread’s attributes. Contains info like priority of thread

-

pthread_attr_destroy

: Remove a thread’s attributes. Free up the memory holding the attribute info.

-

pthread_cancel

: Signal cancellation to a thread. Can be asynchronous or deferred, depending on thread attributes

Thread Creation in Practice

pthread_create(pthread_t *thread,

const pthread_attr_t *attributes,

void *(*start_routine)(void *),

void *argument);

-

where:

-

thread

is a pointer to

pthread

identifier and will be assigned when the thread is created

-

attr

may contain various characteristics (can supply NULL for defaults)

-

start_rountine

is any function that takes a single untyped pointer and returns an untyped pointer

-

arguments

is the argument passed to

start_routine

-

can be limiting to have just one function parameter

-

can define a structure, pass a pointer to that struct to the function

Thread Attributes

-

indicate the following attributes of a thread:

-

detached or joinable state

-

scheduling data (policy, parameters, etc.)

-

stack size

-

stack address

-

by default, new threads are joinable. Can be started in a detached state to stop it from ever being joined

Fork

and

Exec

-

when

fork

is run, it makes a copy of all the threads. The child may then call

exec

, and throw all away the threads

-

can have 2 different implementations of

fork

:

-

one that will duplicate all threads

-

one that will duplicate only the executing thread

UNIX Signals

-

UNIX systems use signals to indicate events

-

are essentially interrupts with an integer id

-

synchronous signals:

signal occurs as a result of program execution (e.g. dividing by zero)

-

asynchronous signals:

signal comes from outside the process (e.g. Ctrl+C)

-

in a multi-threaded program - which thread to send signal to?

-

deliver to the thread to which the signal applies

-

deliver to every thread

-

deliver to certain threads in the process

-

assign a specific thread to receive all signals

-

sync signals will always be sent to the thread that triggered the signal, but async signals can be handled in any of the 4 ways

Thread Cancellation

-

pthread_cancel

: takes one parameter, the thread ID. Set up for deferred cancellation by default

-

pthread_testcancel

: check if thread has been cancelled

L10: Symmetric Multiprocessing (SMP)

-

A SMP system is a stand-alone computer with the characteristics:

-

>=2 general processors

-

processors share the same main memory and I/O devices and are interconnected by a bus

-

processors are capable of performing the same functions

-

system is controlled by an OS that provides interaction between processors and their programs

Time Slicing

-

CPU switches between tasks real quickly to make it seem like processes are executing in parallel

Parallelism and Speedup

-

fully parallelizable: splitting up task can double speed of execution

-

partially parallelizable: splitting up task can improve speed of execution; <2 times

-

non-parallelizable: splitting up task does not improve speed at all

Amdahl’s Law

$$ speedup \leqslant \frac{1}{S + \frac{1-S}{N} } $$

- can take limit to infinity and see what speedup converges on

L11: Concurrency: Synchronization and Atomicity

-

serialization:

want to ensure that certain events take place before other certain events

-

mutual exclusion:

certain events must not happen at the same time

-

concurrent:

two events are concurrent if we cannot tell by looking at the program which will happen first

-

order might change => nondeterminism

-

atomic operation:

an operation that is incapable of being interrupted; non-atomic operations leads to race conditions and sadness

Serialization through Messages

-

if event B has to wait for event A to finish before starting, having event A send a message to B when done

Mutual Exclusion Through Flags

-

critical section:

a section of code that should only be accessed by at most one thread at a time

-

protect them with mutexes (mutual exclusion)

-

make critical sections as short as possible

Test and Set

-

how to implement mutual exclusion?

-

hardware solution - Test and Set!

-

special machine instruction that is performed in a single instruction cycle (therefore not interruptible)

-

test and set returns a boolean value

-

will examine the flag variable and if 0, will set to 1 and return true. If set to 1, will return false

L12: Semaphores

Mutual Exclusion through Messages

-

more useful properties than just one thread being in the critical thread at a time

-

mutual exclusion must apply

-

a thread that halts outside the critical section must not interfere with other threads

-

not possible for a thread requiring critical section to be defined indefinitely

-

when no thread is in the critical section, thread requesting access should get it right away (no unnecessary waiting)

-

no assumptions are made about what the threads do or number of processors (general solution, not special case solution)

-

a thread remains inside the critical section for finite time

Semaphore

-

semaphore: generally, is a system of signals used for communication

-

binary semaphore: variable that can be 0 or 1

-

has two operations:

wait

and

signal

-

wait

: how to enter critical section; if =1, set to 0 and enter thread; if =0, block

-

signal

: if =1 when called, do nothing; if =0 when called and a task is blocking, unlock task; otherwise set to 1

-

no facility to “check” its value. You just call

wait

and what will happen, will happen

-

in this definition, nothing stops another thread from signalling (not the thread using the critical thread), letting threads in the critical section

Mutex

-

mutex: binary semphore with one additional rule: only the thread that called

wait

can call

signal

Counting Semaphores

-

counting/general semaphore: instead of 0 or 1, setup takes an int and that’s the max value.

-

waiting thread will decrement by 1

-

signalling thread will increment by 1

-

if decrementing would make semaphore <=0, is blocked

L13: Synchronization Patterns

-

common sync patterns that semaphores can be used to solve

-

ways of coordinating 2 threads/processes

Signalling

-

tell someone you did a thing

-

semaphore sem, initialized to 0

-

ensures that statement A1 will occur before statement B2

Thread A

1. Statement A1

2. signal(sem)

Thread B

1. wait(sem)

2. Statement B2

Rendezvous

-

expansion of signalling so that it works both ways: tell someone you did a thing and they tell you you did a thing and then you’re at the same place

-

in the following: A1 and B1 happen before A2 and B2

Thread A Thread B

1. Statement A1 1. Statement B1

2. signal(aArrived) 2. signal(bArrived)

3. wait(bArrived) 3. wait(aArrived)

4. Statement A2 4. Statement B2

Mutual Exclusion

-

in the following: mutex initialized to 1, first thread to arrive doesn’t block on wait

Thread A Thread B

1. wait(mutex) 1. wait(mutex)

2. critical section 2. critical section

3. signal(mutex) 3. signal(mutex)

Multiplex

-

same as mutex pattern with

n

threads allowed in critical section at a time

Barrier

-

generalization of rendezvous where

n

threads need to meet up

-

useful to have a variable to keep track of how many threads are in the right place (since it’s shared data, should be protected as a critical section)

Thread K

1. wait(mutex)

2. count++

3. signal(mutex)

4. if count == n

5. signal(barrier)

6. end if

7. wait(barrier)

8. signal(barrier)

-

n

th thread will unlock the semaphore on line 5, every other thread will signal another thread at line 8

The Reusable Barrier

-

in the barrier pattern, count can not decrease so it’s an one-time use barrier

Thread K

1. wait(mutex)

2. count++

3. if count == n

4. wait(turnstile2)

5. signal(turnstile1)

6. end if

7. signal(mutex)

8. wait(turnstile1)

9. signal(turnstile1)

10. [critical section]

11. wait(mutex)

12. count--

13. if count == 0

14. wait(turnstile1)

15. signal(turnstile2)

16. end if

17. signal(mutex)

18. wait(turnstile2)

19. signal(turnstile2)

-

above is a

two-phase barrier

, because all threads have to wait twice, once at each turnstile

L14: Classical Synchronization Problems

Producer-Consumer Problem

-

n

processes share a common buffer that is of fixed size

-

p

producers put data in,

c

consumers take data out

-

in order to keep track of the # of items in the buffer, we have a variable

count

Producer Consumer

1. [produce item] 1. wait(items)

2. wait(spaces) 2. wait(mutex)

3. wait(mutex) 3. [remove item from buffer]

4. [add item to buffer] 4. signal(mutex)

5. signal(mutex) 5. signal(spaces)

6. signal(items) 6. [consume item]

Readers-Writers Problem

-

concurrent reading and modification of a data structure or record by more than one thread

-

any number of readers may be in the critical section simultaneously

-

only one writer may be in the critical section (and when it is, no readers allowed)

Writer Reader

1. wait(turnstile) 1. wait(turnstile)

2. wait(roomEmpty) 2. signal(turnstile)

3. [write data] 3. wait(mutex)

4. signal(turnstile) 4. readers++

5. signal(roomEmpty) 5. if readers == 1

6. wait(roomEmpty)

7. end if

8. signal(mutex)

9. [read data]

10. wait(mutex)

11. readers--

12. if readers == 0

13. signal(roomEmpty)

14. end if

15. signal(mutex)

Dining Philosophers Problem

-

5 people at a table, 5 chopsticks

-

when they want to eat, they attempt to grab 2 nearest chopsticks (left, right). Then they can eat rice. When they’re done, put down the chopsticks

-

chopstick = binary semaphore

-

if they all try to eat at once, they all grab left chopstick. None can grab the right.

-

could protect entire table with a semaphore - only one can eat at a time. This works but suboptimal

-

can limit to 4 philosophers. k pigeonholes, k+1 pigeons - someone must have at least two pigeons

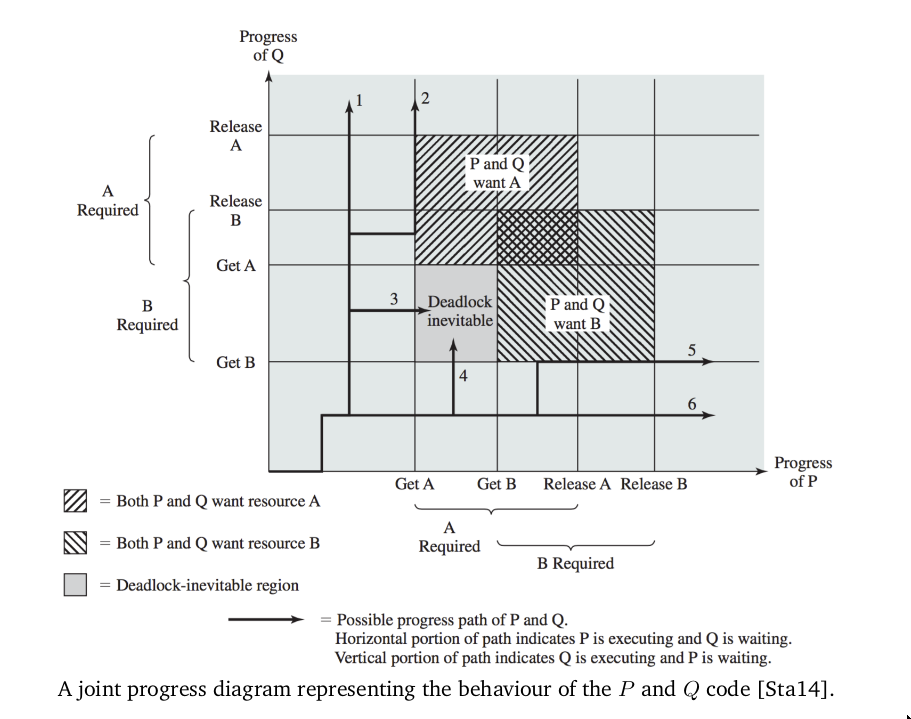

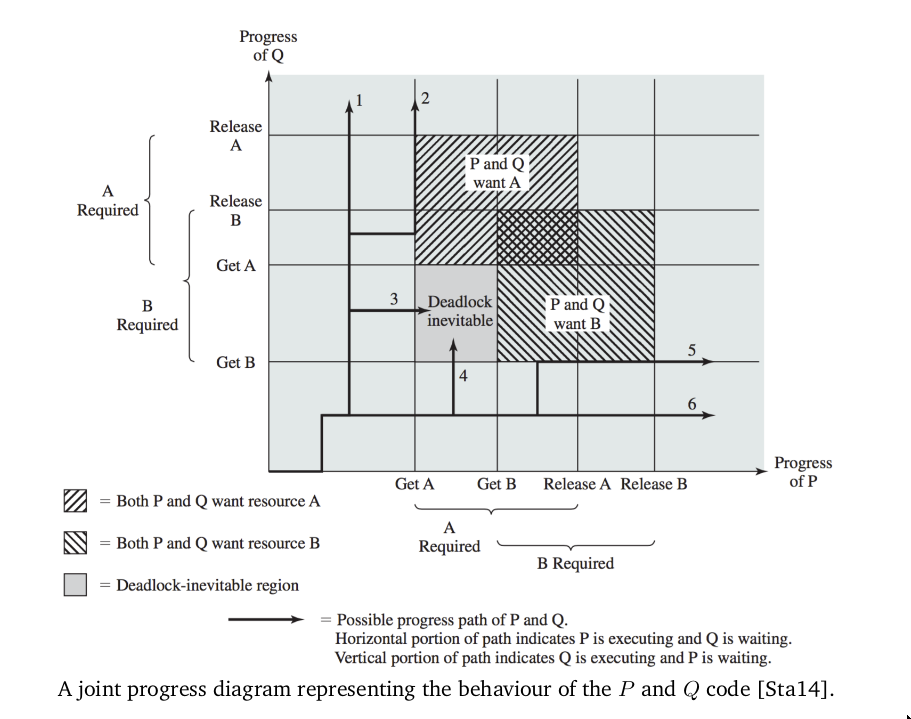

L15: Deadlock

-

permanent blocking of a set of processes that compete for system resources

-

only really deadlock when blocked, waiting for event that comes from another blocked process (therefore event will never happen)

-

deadlock example:

Thread P Thread Q

1. wait(a) 1. wait(b)

2. wait(b) 2. wait(a)

3. [critical section] 3. [critical section]

4. signal(a) 4. signal(b)

5. signal(b) 5. signal(a)

-

execution paths:

-

Q gets B and A, releases. P gets both and releases

-

Q gets B and A. P blocks on A. Q releases B and A. P gets A, B, releases.

-

Q gets B, then P gets A. Deadlock.

-

P gets A, then Q gets B. Deadlock.

-

P gets A, and then B. Q blocks on B. P releases A and B. Q gets B, A, releases.

-

P gets A and B, releases. Q gets both and releases.

-

fatal region = deadlock inevitable region

Reusable and Consumable Resource

-

reusable: can be used by one process at time, not depleted

-

consumable: created and destroyed upon consumption

Conditions for Deadlock

-

mutual exclusion: only one process can use at once

-

hold-and-wait: a process holding a resource may request more resources and be forced to wait for them

-

no preemption: a resource cannot be “taken” from the process that holds it

-

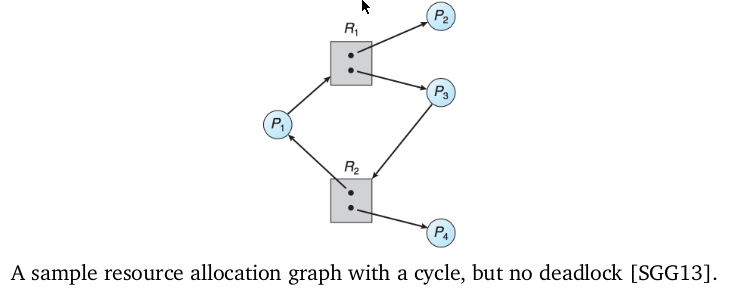

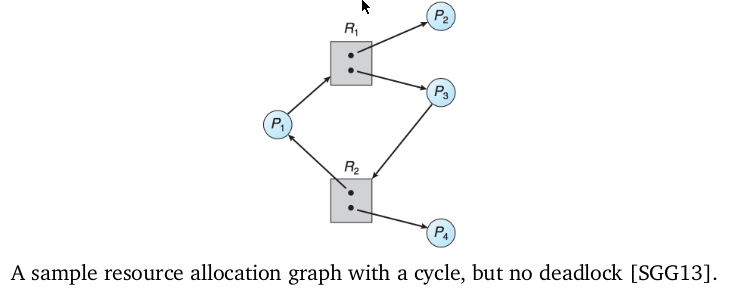

circular wait: a cycle in the resource allocation graph

Dealing with Deadlock

-

approaches:

-

ignore it

-

deadlock prevention

-

deadlock avoidance

-

deadlock detection

Ignore It

Deadlock Prevention

-

need to get rid of one of the conditions:

-

mutual exclusion

-

throwing out the baby with the bathwater

-

hold-and-wait

-

request all resources at once.

-

process needs to know all resources it needs at the beginning

-

process can’t start until it has all resources - will need to wait until it starts

-

process keeps the resources longer than it needs

-

release all resources when you need to request a new one, and then request all again

-

some things cannot be easily released (e.g. memory). Thus, cannot guarantee no deadlock will happen, just makes it less likely

-

two-phase locking

: process attempts to lock a group of resources; if it can’t, it’ll release them all and try again. -> works but doesn’t fit into our model of semaphores

-

no-preemption:

-

if process is waiting for a process and also holding resources, another process can take those resources and they’re added to the list of things they’re waiting for

-

for this to work, resource must be something whose state can be easily saved and restored -> some resources do not work like that

-

therefore not sufficient to get rid of deadlock, only makes it less likely

L16: Deadlock Avoidance

Ordering of Resources

-

resources given some order, they must be requested in that order

Stay Alert Stay Safe

-

process gives OS some info about resource they might need. OS tries to schedule things such that a deadlock cannot happen

-

OS needs information about how to pick a “safe” route

-

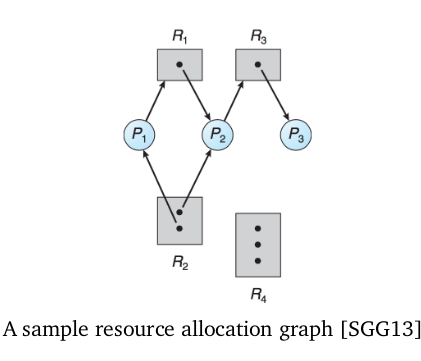

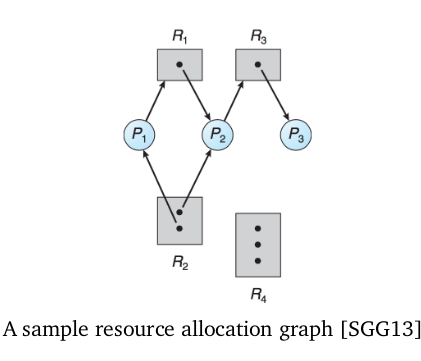

resource allocation diagram:

shows how many instances of resource all processes have, how many they need before finishing, how many are free

-

to see if a set of requests are safe, see if there is any path that guarantees all processes going to completion

-

a system being unsafe does not mean deadlock is certain - it is just possible

Resource-Allocation-Graph Algorithm

-

have OS maintain a resource allocation graph so it can avoid cycles

-

this works if there is only one instance of a resource and requires all resources be declared in advance

-

graph has 3 types of edges:

-

requests

-

allocation

-

claim (resource may request a resource)

-

allocation only allowed if it does not result in a cycle in the graph

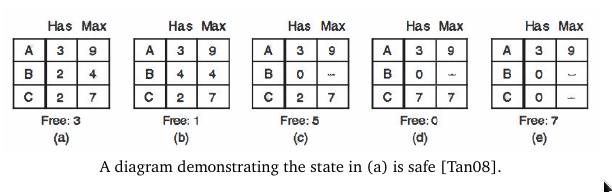

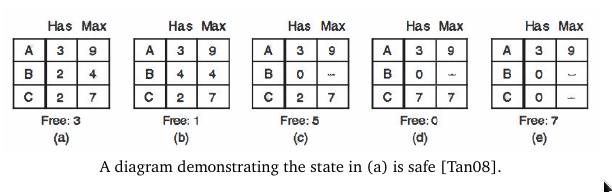

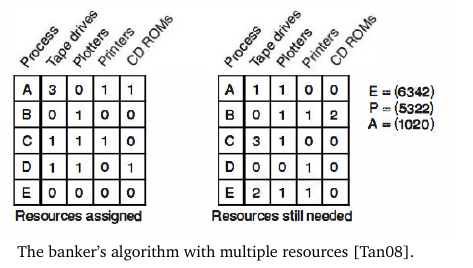

Banker’s Algorithm

-

OS will use the resource allocation diagrams from earlier to evaluate if any resource request will transition the system to an unsafe state

-

if it would, request will be denied or process will be blocked

-

resource allocation diagram can accommodate multiple resources, as below

-

informal method for check if state is safe:

-

Look for a row in matrix,

r

, where the unmet resource needs are less than or equal to available resources in A. If not such row exists => unsafe

-

Assume the process from

r

gets all the resources it needs. Mark as terminated, put all resources in A

-Repeat steps until a) all processes are marked terminated and state is safe or b) no process remains whose needs can be met and the state is unsafe

-

while useful in theory, in practice is useless since processes rarely know their max resource needs in advance and number of processes is not fixed

Other Recommendations

-

minimize # of critical sections and length

-

all tasks must release resources asap

-

do not suspend/block tasks in the critical section

-

all critical sections must be error-free

-

do not allocate resources in interrupt handlers. Could block, entire system could go down

-

always perform validity checks on pointers (or null checks) in critical sections. Seg fault or exception might mean never releasing the lock

L17: Deadlock Detection and Recovery

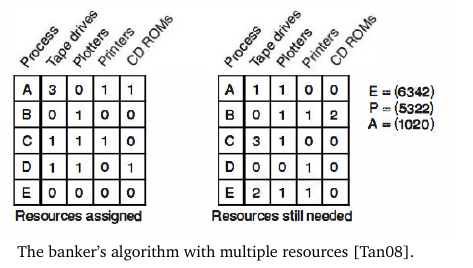

General Deadlock Detection Algorithm

-

n processes numbered P

1

through P

n

and m resources

-

resources represented by:

-

E, existing resource vector - total number of instances of each resource

-

A, available resource vector - how many instances available

-

use two matrices to represent current situation of system

-

C, current allocation: contains data about what resources assigned to each process

-

row

i

of C shows how many of each resource P

i

has

-

R, request matrix

-

row

i

of R shows how many of each resource P

i

wants

-

column j is a certain resource

$$ \sum_{i=1}^{n} C_ij + A_j = E_j $$

Algorithm (ϴ(m * n

2

)

-

Search for an unmarked process whose requests can all be satisfied with available resources in A

-

Find a process P

i

such that row R

i

<- A

-

If a process is found, add the allocated resources of that process to the available vector and mark the process.

-

A = A + C

i

. Go back to step 1

-

If no process was found in the search of step 1, algorithm terminates

When to Detect Deadlock

-

deadlock detection expensive to run - so when should we run?

-

could run every time a resource request cannot be granted (process gets blocked)

-

could run periodically

-

depends on:

-

how often we expect deadlock to occur

-

how severe a problem it is when deadlock occurs

Deadlock Recovery

-

manual strategy: someone is notified, responsible for

Robbery

-

preemption - after detection, OS preempts resources and gives them to other processes until deadlock is solved; resource must be able to save and restore its state for this to work.

Mass murder

-

kill all processes involved in the deadlock, all resources become available

-

may not solve the problem for reals because the circumstances may happen again

Murder

-

just kill one of the processes involved in the deadlock, see if that fixes the problem

Time Travel

-

rollback: returning the state of the process to a saved state from an earlier time

-

saved state = checkpoint

-

act of creating and saving a checkpoint = checkpointing

-

checkpoints may be created periodically or before beginning a particular operation that uses a lot of resources

-

sometimes rolling back does not save us from deadlock happening again

-

can attempt a few time before giving up and trying something else

Armageddon

-

sometimes the best thing to do is reboot the system

-

NASA’s spirit rover does this (lol)

Victim Selection

-

it is not necessary to choose a process involved in the deadlock

-

if P1 and P2 are deadlocked, P3 may have an instance P1 needs. Killing P3 will let P1 and P2 proceed

-

can think of this as an optimization problem - you can define a cost function for this, eval it, choose the lowest

-

some factors to consider:

-

the priority of the process

-

how long the process has been executing

-

how long is remaining in execution, if known

-

what resources the process has (number and type)

-

future resource requests, if known

-

whether the process is user-interactive or in the background

-

how many times, if any, the process has been selected as a victim

-

tends to favour older processes to persevere over younger

-

less work is lost

-

if oldest process is always selected, they might starve and never run to completion

-

keeping track of victim selection helps prevent starvation

Miscarriages of Justice

-

deadlock detection algorithm tends to be conservative in that it will err on the side of saying that there is a deadlock

-

the algorithms do not have to be perfect if we have chosen an appropriate recovery strategy

L18: Concurrency and Synchronization in POSIX

Concurrency with

pthreads

-

pthread

routines to deal with mutual exclusion

-

pthread_mutex_init

:

-used to create a new mutex variable and returns it with type

pthread_mutex_t

-

takes an optional param, the attributes or can initialize with null or with the default initializer:

-

pthread_mutex_t mymutex = PTHREAD_MUTEX_INITIALIZER;

-

when created, the mutex is by default unlocked

-

pthread_mutex_unlock

: unlocks selected mutex

-

pthread_mutex_lock

: blocking lock function

-

pthread_mutex_trylock

: non-blocking lock function (get back a result that tells you if you got the lock or not)

-

attempting to unlock a mutex that is not currently locked is a error, or if you attempt to unlock a mutex owned by another

-

pthread_mutex_destroy

: cleans up the mutex, destroys it.

-

may fail if mutex is locked - attempting to destroy a locked mutex will result in “undefined behaviour”

Condition Variables

-

condition variable allows synchronization based on the value of the data

-

think of condition variable as an “event”, similar to but different than a counting semaphore

-

pthread_cond_init

: creates a

pthread_cont_t

-

pthread_cond_destroy

: to destroy them

-

condition variables must be paired with a mutex

-

will release the mutex while it waits for the condition

-

when the condition is true, the mutex is automatically locked and the thread can proceed

-

mutex should be unlocked when done with it

pthread_cond_wait

: takes the condition variable and the mutex. Performs the above behaviour

-

pthread_cond_signal

: signals a provided condition variable

-

thread_cond_broadcast

: signals all threads waiting for the condition variable

Monitors

-

can be made using condition variables

-

objective of the monitor is to make it so programmers do not need to code the synchronization part of their code directly

-

example:

synchronized

keyword in Java (used to ensure only one thread can be inside a method/block at a time)

Concurrency Mechanisms in UNIX

-

UNIX has a general semaphore

-

maintains the following data:

-

value of semaphore

-

process ID of last process to access it

-

# of processes waiting on a number > than the current value

-

# of processes waiting for the value to be 0

-

created in sets of 1 or more

-

sem_op

: system call for semaphore operations

-

positive - ++ value of semaphore, wake up processes waiting

-

0 - if current value is 0, do nothing. else, block until it’s 0 and increment # of processes waiting for it be 0

-

negative and its absolute value < current value - add

sem_op

to the current value

-

negative and its absolute value > current value - block until value is increased, ++ # of processes waiting for value > current value

Linux Kernel Concurrency Mechanisms

Atomic Operations

-

kernel provides operations that are guaranteed to executive atomically

-

two types: those that operate on ints, those that operate on a single bit in a bitmap

-

there is a defined type

atomic_t

, ensuring that only atomic operations can be used on this data type and vice versa

Spinlocks

-

way to implement constant checking for a lock

-

implemented as an int. If int=0, lock by setting to 1. If int=1, check its value again

-

this is pretty inefficient lol except for when waiting would take less time than context switching

-

reader-writer spinlock: allows multiple readers but gives exclusive access to a writer

-

implemented as a 24-bit reader counter and unlock flag, with the meaning defined as:

|

Counter

|

Flag

|

Interpretation

|

|

0

|

1

|

The spinlock is released and available

|

|

0

|

0

|

The spinlock has been acquired for writing

|

|

n (n>0)

|

0

|

The spinlock has been acquired for reading by n threads

|

|

n (n>0)

|

1

|

Invalid state

|